Make AI Science Agents More Human

The work of a human scientist usefully involves things that don’t look like science

We write Reinvent Science to broaden the conversation around science and science funding, and we rely on you to help us reach as many readers as possible. Please support our work by subscribing and sharing.

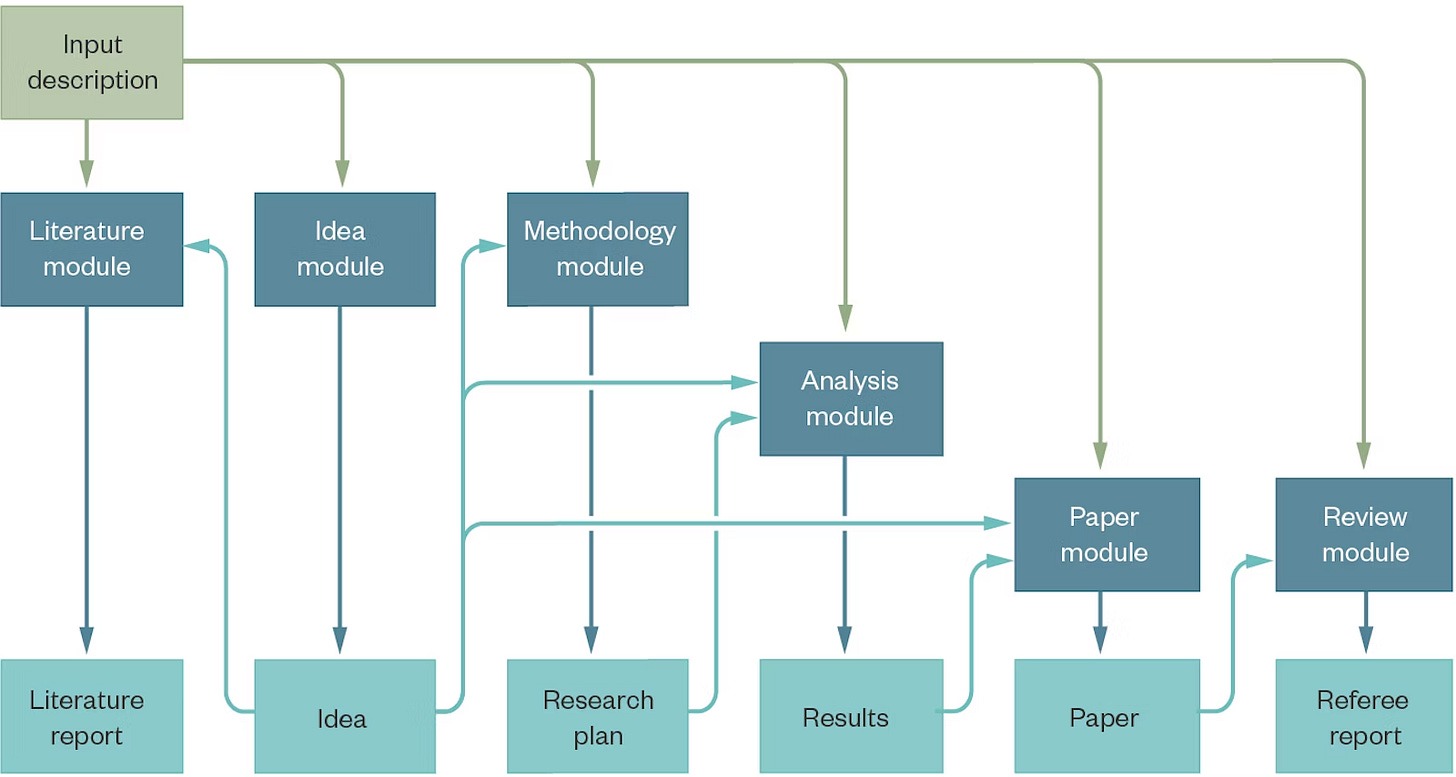

We think that current AI agents for science are making a major mistake: getting their outer loop wrong. Take Denario, for example: it organizes its agents around core activities and outputs. Its competitor Kosmos does much the same (with more agents of fewer types) while incorporating a world model that serves as a sort of shared memory for its agents.

Structure of the Denario system from this blog post

This, however, is a poor model of how a scientist structures their work and an even worse model of a collaboration between multiple scientists. In an earlier post, we laid out an alternative formulation of the scientific method that we believe is a good model of real research. It consists of a fully connected graph with 7 nodes, or states, that a scientist can occupy:

Choose: Decide what to work on; taste helps.

Observe: See something in the world and be curious about it.

Describe: Formalize what you see so that someone else can know it when they see it.

Distill: Reduce your description to only what matters for the thing you’re interested in.

Predict: Know what will happen before it happens.

Control: Make specific things happen when you choose.

Extend: Make new things happen when you choose.

To date, scientific AI lives almost entirely in Distill, with shades of Describe and Predict. For ensembles of AI agents to make meaningful contributions, they will need to cover all 7 nodes. Where are the AIs for deciding what to work on? For observing the world with curiosity and describing it in detail? If they exist, they’re not being used for science. Controlling and Extending phenomena will be harder, since both require control of experimental apparatus, but teams are hard at work on that. We recommend that scientific AI have a concept of these 7 nodes (or something like them) and explicitly transition between them when working.
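To make that recommendation a little more concrete, here is a minimal sketch of what "having a concept of the 7 nodes" could look like in code. It is an illustration, not a description of how Denario, Kosmos, or any other system works. The names `Node`, `ALLOWED`, and `ResearchAgent` are hypothetical, and we assume "fully connected" means an agent may move from any node to any other node, with every move logged alongside a stated reason.

```python
from enum import Enum
from itertools import permutations


class Node(Enum):
    """The 7 states a scientist (or agent) can occupy."""
    CHOOSE = "choose"
    OBSERVE = "observe"
    DESCRIBE = "describe"
    DISTILL = "distill"
    PREDICT = "predict"
    CONTROL = "control"
    EXTEND = "extend"


# Fully connected graph (our assumption): every ordered pair of
# distinct nodes is an allowed move, giving 7 * 6 = 42 edges.
ALLOWED = set(permutations(Node, 2))


class ResearchAgent:
    """Hypothetical wrapper that tracks which node an agent occupies."""

    def __init__(self, start=Node.CHOOSE):
        self.node = start
        self.log = []  # (source, target, reason) for every transition

    def transition(self, target, reason):
        """Move to another node explicitly and record why."""
        if (self.node, target) not in ALLOWED:
            raise ValueError(f"cannot move from {self.node} to {target}")
        self.log.append((self.node, target, reason))
        self.node = target

    def unvisited(self):
        """Nodes this agent has never occupied."""
        seen = {self.node}
        for source, target, _ in self.log:
            seen.update((source, target))
        return set(Node) - seen


# Usage: an agent that only ever describes and distills leaves
# most of the graph untouched, which the log makes visible.
agent = ResearchAgent(start=Node.DESCRIBE)
agent.transition(Node.DISTILL, "reduce the description to what matters")
print(sorted(node.name for node in agent.unvisited()))
```

The point of the explicit `transition` call is that the agent has to say where it is going and why, so a log that never leaves Distill is easy to spot.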

In addition, the actual outer loop of a human scientist usefully involves things that don’t look like science at all. What if a scientific AI agent could take a break to watch YouTube or read a novel? Would it make random useful connections like humans do? Should we create an equivalent to sleep so that the agent can wake up with insights? Interesting things happen if you give Claude Code a “private” diary. What human-like behaviors might improve the performance of science AI agents?

LLMs will not be able to write decent papers by themselves until they really understand Peter Medawar's essay 'Is the Scientific Paper a Fraud?'.